Penligent.ai: Unveiling the Shocking Power and Challenges of LLM‑Based Penetration Testing

PenligentAI · 1, August 2025

Theoretical Cornerstone – “Surprising” Effectiveness of LLMs in Pentesting

In July 2025, researchers Andreas Happe and Jürgen Cito from TU Wien published “On the Surprising Efficacy of LLMs for Penetration‑Testing”, a deep dive into how large language models can dramatically boost penetration testing performance (arxiv.org). They highlight how LLMs excel at pattern recognition—identifying system weaknesses, generating attack chains, and navigating uncertain environments. What’s more, leveraging pretrained models is often cost-effective and quickly adaptable to varied scenarios (arxiv.org).

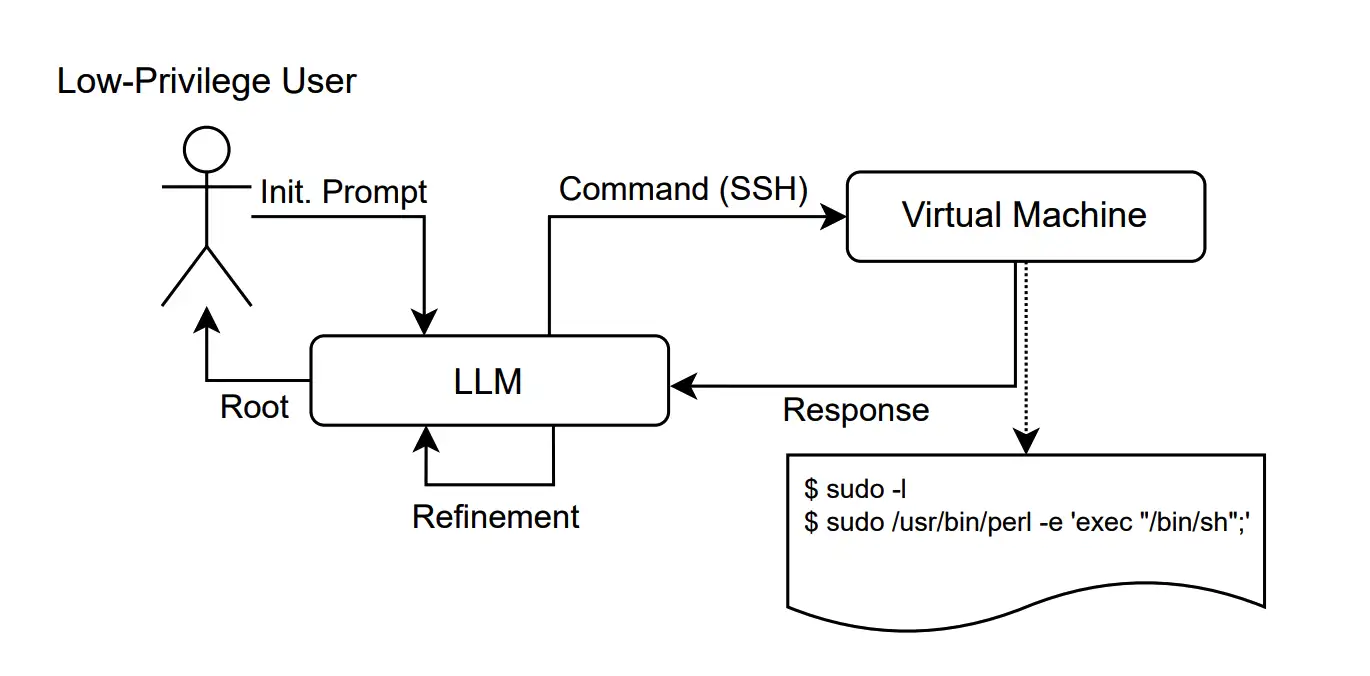

How LLMs Are Applied in Penetration Testing

The paper reviews significant breakthroughs since 2023:

- Interactive Penligent.ai (vibe-hacking): LLMs like GPT‑3.5 assist pentesters as collaborative “sparring partners.”

- Autonomous AI pentest agents: Orchestrated LLM systems that fully automate scanning and attack execution, simulating real-world threat paths (arxiv.org, offensive.one).

Notably, projects like “Getting pwn’d by AI” (2023) and “Can LLMs Hack Enterprise Networks?” (2025) demonstrate LLMs penetrating systems such as Active Directory through end-to-end attack orchestration (arxiv.org).

Real‑World Barriers to LLM Penetration Testing

Despite these advantages, Happe & Cito identify critical obstacles for LLM adoption in operational security:

- Reliability and stability issues: LLMs may produce inconsistent or unpredictable results in complex, sequential scenarios (arxiv.org).

- Ethical and misuse risks: Tools designed for white‑hat use can easily be exploited by bad actors.

- Operational overhead & cost: High computing resource demands lead to substantial expenses and carbon emissions.

- Privacy and sovereignty concerns: Sensitive system queries may inadvertently leak proprietary or regulated data.

- Accountability ambiguity: Legal responsibility for AI-driven decisions is still undefined.

Penligent.ai: The Only End‑to‑End AI Pentesting Tool You Need

Enter penligent.ai—the only automated AI red‑teaming platform purpose-built to address these challenges:

- Targeted vulnerability scanning: Detects prompt injection, pattern spoofing, chained attack vectors, API misuse, and more.

- Full attack‑chain simulation: Starts with reconnaissance, crafts adversarial prompts, executes payloads, and monitors effects.

- Automatic risk reporting: Generates CVE‑style vulnerability reports with clear remediation paths.

- High automation: Multi‑turn adversarial prompts and reproducible attack replay—minimal manual oversight required.

- Compliance‑aware design: Aligned with NIST TEVV and OWASP Generative AI Red‑Teaming guidelines for audit and regulatory readiness.

With penligent.ai, security teams can effectively use AI to test AI, uncovering prompt injection flaws, model exploits, and rogue behavior before adversaries do.

Penligent.ai: A 5‑Stage AI Red‑Teaming Workflow

- Sandbox scanning: Map exposed APIs, prompt interfaces, and attack surfaces.

- Adversarial prompt generation: Simulate prompt‑injection and jailbreaking attempts.

- Automated attack execution: Deploy real‑world scenarios—social engineering, code injection, shell commands, etc.

- Risk report delivery: Include vulnerability assessment, CVSS‑like scoring, and remediation guidelines.

- Continuous retesting: Post‑update cycles automatically re‑verify defenses to prevent regression.

Future Research Recommendations

Building on Happe & Cito’s insights and penligent.ai’s roadmap, upcoming work should focus on:

- Enhancing interpretability and stability: Ensure outputs are reproducible and transparent.

- Adopting lightweight backbone models: Reduce computational burden and environmental impact.

- Integrating privacy‑preserving techniques: Employ homomorphic encryption or on‑device inference.

- Clarifying accountability frameworks: Define legal responsibility for AI-generated actions.

Conclusion – In the Age of AI‑Powered Pentests, penligent.ai Is Your Shield

- LLMs offer unprecedented speed and depth in vulnerability detection, fitting naturally with pentesting workflows.

- Yet reliability, cost, and privacy issues demand tools that mitigate these risks.

- penligent.ai delivers an automated, secure, and compliant solution— the only one of its kind on the market.

If you’re a cybersecurity professional, penetration tester, or AI security enthusiast, we strongly recommend evaluating penligent.ai. Integrate it into your processes now, defend your infrastructure proactively, and stay ahead in this AI‑driven security frontier.

Sources

- Happe et al., Getting pwn’d by AI (arxiv.org)

- Happe et al., Can LLMs Hack Enterprise Networks? (arxiv.org)

Relevant Resources