💬 ChatGPT and the Security Crossroads: Bridging the Gap in the Age of AI-Driven Development

PenligentAI · July 30, 2025

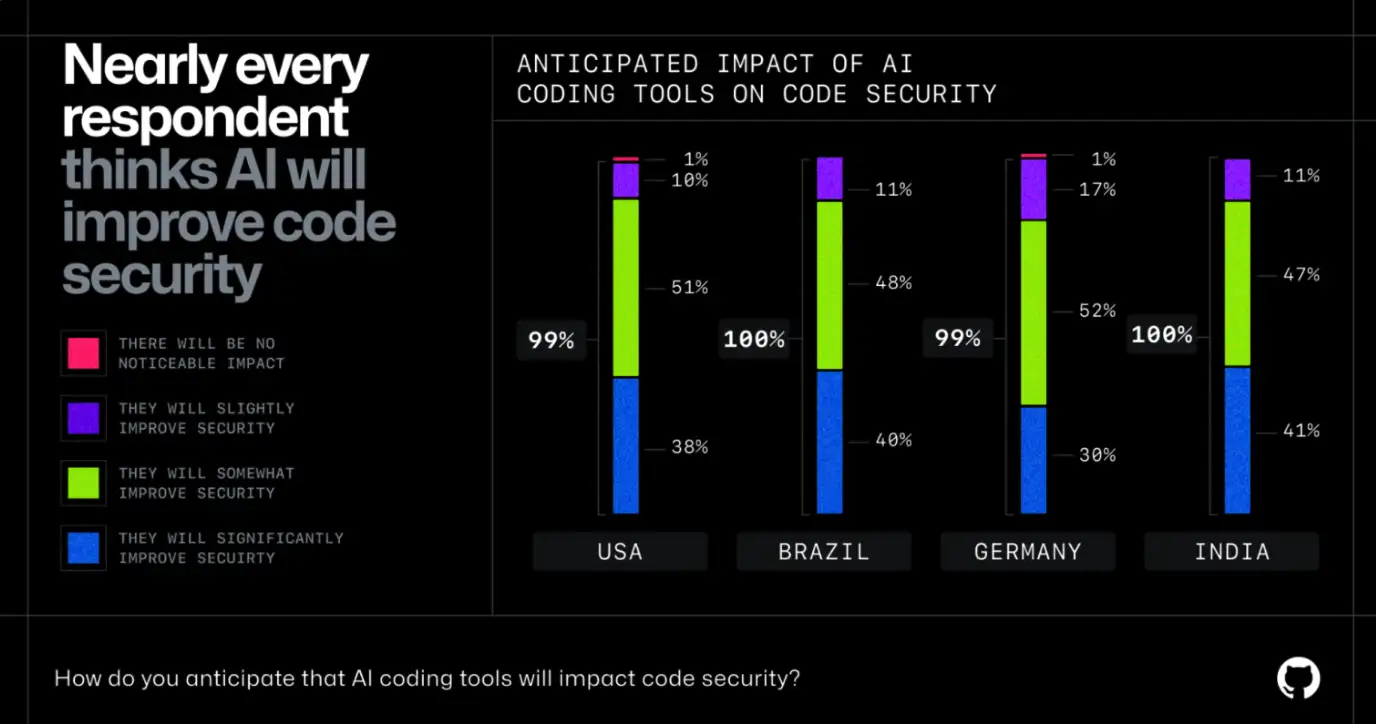

Artificial Intelligence is rewriting the rules of software development—and security is at a turning point. With the meteoric rise of tools like ChatGPT, GitHub Copilot, and agent-based frameworks, development is faster and more scalable than ever. But the security debt, blind spots, and technical risks are scaling just as fast.

In this AI-native era, do we continue trailing behind—treating security as an afterthought? Or do we finally embed it, with precision, into the DNA of development?

From GitHub Copilot to ChatGPT: The Velocity–Vulnerability Tradeoff

Generative AI is now mainstream. According to Google’s 2024 DORA report, over 75% of developers rely on AI code assistants. NVIDIA's CEO famously remarked that “kids no longer need to learn to code.” Google claims 25% of its code is now AI-written. And startups funded by Y Combinator report codebases that are up to 95% AI-generated in recent batches.

That velocity is intoxicating. AI lets developers build and ship faster—up to 50% gains in throughput. But as AI-assisted commits scale, so do the risks.

Research from BaxBench shows that 62% of AI-generated code contains vulnerabilities, while another study highlights that 29.6% of AI outputs exhibit security weaknesses. Meanwhile, developers overestimate AI code quality, often skipping reviews and shipping insecure code to production.

This "trust the model" mindset creates a widening security gap, made worse when vulnerable AI-generated snippets are reused to train future models. The result is a feedback loop of flawed logic, embedded into the core of software ecosystems.

The Vibe Coding Dilemma: When UX Kills Security

A major symptom of this shift is the rise of Vibe Coding—an AI-powered workflow where devs "describe what they want" and get full code from the model, skipping syntax and structure. It's fast. It’s fun. But it’s often dangerous.

A Vibe Coder might ask ChatGPT:

"Write Python to upload a file to S3."

And it responds—with access keys hardcoded into the snippet.

```python
import boto3

# Insecure: the access key and secret are hardcoded in source
s3 = boto3.client('s3',
                  aws_access_key_id='AKIA123456789EXAMPLE',
                  aws_secret_access_key='abc123verysecretkey')
```

That’s a textbook security flaw: secrets leakage, cloud infrastructure compromise, and irreversible Git exposure. Yet this is what vibe coders ship every day.
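A safer version of the same upload keeps secrets out of source entirely. The sketch below assumes credentials are supplied through boto3's default credential chain (environment variables, the shared credentials file, or an attached IAM role). The function name `upload_file` and its parameters are illustrative and not part of the original prompt.

```python
def upload_file(path, bucket, key):
    """Upload a local file to S3 without embedding credentials in code."""
    # boto3 is a third-party package; it is imported here so this module
    # still loads on machines where it is not installed.
    import boto3

    # No keys are passed: boto3 looks them up through its default chain
    # (environment variables, shared credentials file, IAM role).
    s3 = boto3.client("s3")
    s3.upload_file(path, bucket, key)
```

Called as `upload_file("report.pdf", "my-bucket", "reports/report.pdf")`, where the bucket and key are placeholders, nothing sensitive ever lands in Git history.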

This is not a Copilot problem. It’s an architecture problem, one that requires new safeguards able to operate at AI speed.
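One example of such a safeguard is an automated secret check that runs before code is committed. The sketch below is a deliberately small heuristic, not a replacement for a dedicated secret scanner: the two patterns (the commonly used `AKIA` plus 16 characters shape for AWS access key IDs, and string literals assigned to secret-looking names) and the function name `find_secrets` are assumptions chosen for illustration.

```python
import re

# Heuristic: long-term AWS access key IDs are commonly matched as
# "AKIA" followed by 16 uppercase letters or digits.
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")

# Heuristic: a quoted literal of 8+ characters assigned to a name
# containing "secret", "password", or "token".
SECRET_ASSIGNMENT = re.compile(
    r"(secret|password|token)\w*\s*=\s*['\"][^'\"]{8,}['\"]",
    re.IGNORECASE,
)


def find_secrets(source):
    """Return (line_number, rule_name) pairs for suspicious lines."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        if AWS_KEY_ID.search(line):
            findings.append((lineno, "aws-access-key-id"))
        if SECRET_ASSIGNMENT.search(line):
            findings.append((lineno, "hardcoded-secret"))
    return findings
```

Run against the S3 snippet above, a check like this would flag both the key ID line and the secret line, which is enough to block the commit and prompt a human review.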

Why Traditional Security Can't Keep Up

Security teams have tried shifting left, bolting on SAST, DAST, IaC scanners, SBOMs, and container tools to CI/CD pipelines. But those tools were never designed for conversational coding. They're static, context-blind, noisy—and typically ignored by devs.

Even DevSecOps, intended to bridge the dev–security gap, has become a checklist ritual, not a real integration.

Meanwhile, the attack surface keeps growing. The CVE count surged 48% in 2024 alone (source). More apps, more code, and more automation mean more vulnerabilities. And no human security team can scale fast enough.

MCP and LLM Protocols: New Attack Surfaces

Modern development isn’t just AI-generated; it’s AI-orchestrated. New protocols like the Model Context Protocol (MCP) or Agent2Agent let LLMs connect directly with APIs, databases, and infrastructure.

But what happens when a model interprets a user message as a command? That’s exactly how recent Supabase + MCP integrations leaked entire databases—with the LLM accidentally executing instructions embedded inside a "support request." (source)

No malware. No exploit. Just prompt confusion—and full SQL exfiltration.
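One way to contain this class of failure is to treat any tool call proposed after the model has read untrusted text as suspect. The sketch below is a hypothetical allow-list gate, not a description of how any MCP server or the Supabase integration actually works: `ToolCall`, `authorize`, the `tainted` flag, and the tool names are all invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical tool names that cannot modify or bulk-export data.
READ_ONLY_TOOLS = {"search_tickets", "get_ticket"}


@dataclass
class ToolCall:
    name: str
    arguments: dict
    # True if the model read untrusted, user-supplied text (for example
    # a support request) before proposing this call.
    tainted: bool


def authorize(call):
    """Allow tainted context to trigger read-only tools only."""
    if call.name in READ_ONLY_TOOLS:
        return True
    # Anything more powerful requires a context free of untrusted input.
    return not call.tainted
```

Under these assumptions, an `execute_sql` call proposed while a support ticket is in context would be refused, while fetching that ticket would still be allowed. The design choice is to make the permission decision outside the model, so a confusing prompt cannot talk its way past it.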

These new AI-native surfaces require AI-native defense. And that’s where Penligent enters.

Penligent: Intelligent Pentesting for an AI-First World

Unlike legacy scanners, Penligent is an AI-native penetration testing platform purpose-built for modern workflows. It actively tests, fuzzes, and audits AI agent behavior—across coding environments, prompt interfaces, and automated workflows.

With Penligent, developers and AppSec teams can:

- Simulate AI agent misuse scenarios like prompt injection or model hijacking

- Detect LLM-aware misconfigurations in tools like Cursor, Copilot, and custom MCP setups

- Perform full-scope AI-aware pentests—covering prompt chains, vector databases, auth boundaries, and inter-agent protocols

- Auto-generate and execute red team scripts in real time, tailored to the app's AI integrations

- Deliver compliance-ready vulnerability reports, backed by reproducible AI attack chains

Whether you’re building with OpenAI APIs or deploying agent stacks with LangChain, Penligent delivers continuous assurance—by testing like an attacker, not guessing like a scanner.

Rethinking Security in the ChatGPT Age

The security landscape is no longer just about patching code—it’s about understanding how models think, how agents act, and how vulnerabilities hide in the gray space between data and intent.

As agent ecosystems evolve and protocols like MCP proliferate, organizations need more than shift-left slogans. They need AI-native red teaming, context-aware code reviews, and dynamic feedback loops—embedded into developer tools, not slapped on afterward.

Penligent isn’t just watching the AI shift. It’s leading it—bringing surgical precision to AI threat modeling and pentesting.

Want to see Penligent in action?

Explore Penligent.ai for demos, use cases, and the future of AI penetration testing.